Creswell, J. W. (2014). Research Design: Qualitative, Quantitative and Mixed Methods Approaches (4th ed.). Thousand Oaks, CA: Sage

The book Research Design: Qualitative, Quantitative and Mixed Methods Approaches by Creswell (2014) covers three approaches: qualitative, quantitative and mixed methods. This educational book is informative and illustrative, and is equally beneficial for students, teachers and researchers. Readers should have a basic knowledge of research to understand the book well. The book has two parts. Part I (chapters 1-4) consists of steps for developing a research proposal, and Part II (chapters 5-10) explains how to develop a research proposal or write a research report. A summary at the end of every chapter helps the reader recapitulate the ideas. Moreover, the writing exercises and suggested readings at the end of every chapter are useful for readers. Chapter 1 opens with a definition of research approaches, and the author gives his opinion that the selection of a research approach is based on the nature of the research problem, the researchers' experience and the audience of the study. The author defines qualitative, quantitative and mixed methods research, and distinguishes between the quantitative and qualitative approaches. The author believes that interest in qualitative research increased in the latter half of the 20th century. The worldviews, which Fraenkel, Wallen and Hyun (2012) and Onwuegbuzie and Leech (2005) call paradigms, are explained. Sometimes, the language becomes too philosophical and technical. This is probably because the author had to explain some technical terms.

Learning Goals

- Learn the fundamental tenets of empiricism.

Exploring Experimental Psychology

What Is Science?

Some people are surprised to learn that psychology is a science. They generally agree that astronomy, biology, and chemistry are sciences, but wonder what psychology has in common with these other fields. What all sciences have in common is a general approach to understanding the natural world. Psychology is a science because it takes this same general approach to understanding one aspect of the natural world: human behavior.

Features of Science

The general scientific approach has three fundamental features (Stanovich, 2010). The first is systematic empiricism . Empiricism refers to learning based on observation, and scientists learn about the natural world systematically, by carefully planning, making, recording, and analyzing observations of it. As we will see, logical reasoning and even creativity play important roles in science too, but scientists are unique in their insistence on checking their ideas about the way the world is against their systematic observations.

The second feature of the scientific approach—which follows in a straightforward way from the first—is that it is concerned with empirical questions . These are questions about the way the world actually is and, therefore, can be answered by systematically observing it. The question of whether women talk more than men is empirical in this way. Either women really do talk more than men or they do not, and this can be determined by systematically observing how much women and men actually talk. There are many interesting and important questions that are not empirically testable and that science cannot answer. Among them are questions about values—whether things are good or bad, just or unjust, or beautiful or ugly, and how the world ought to be. So although the question of whether a stereotype is accurate or inaccurate is an empirically testable one that science can answer, the question of whether it is wrong for people to hold inaccurate stereotypes is not. Similarly, the question of whether criminal behavior has a genetic component is an empirical question, but the question of what should be done with people who commit crimes is not. It is especially important for researchers in psychology to be mindful of this distinction.

The third feature of science is that it creates public knowledge. After asking their empirical questions, making their systematic observations, and drawing their conclusions, scientists publish their work. This usually means writing an article for publication in a professional journal, in which they put their research question in the context of previous research, describe in detail the methods they used to answer their question, and clearly present their results and conclusions. Publication is an essential feature of science for two reasons. One is that science is a social process—a large-scale collaboration among many researchers distributed across both time and space. Our current scientific knowledge of most topics is based on many different studies conducted by many different researchers who have shared their work with each other over the years. The second is that publication allows science to be self-correcting. Individual scientists understand that despite their best efforts, their methods can be flawed and their conclusions incorrect. Publication allows others in the scientific community to detect and correct these errors so that, over time, scientific knowledge increasingly reflects the way the world actually is.

Science Versus Pseudoscience

Pseudoscience refers to activities and beliefs that are claimed to be scientific by their proponents, and may appear to be scientific at first glance, but are not. Any "science" that lacks one or more of the three previously mentioned features of science is considered pseudoscience. For example, graphology (the "science" of determining a person's personality traits by analyzing the person's handwriting) is considered a pseudoscience because it lacks systematic empiricism: either there is no relevant scientific research supporting the idea, or there is relevant scientific research that discredits or contradicts it, but that research is ignored. A pseudoscience might also lack public knowledge. People who promote the beliefs or activities might claim to have conducted scientific research but never publish that research in a way that allows others to evaluate it.

A set of beliefs and activities might also be pseudoscientific because it does not address empirical questions. The philosopher Karl Popper was especially concerned with this idea (Popper, 2002). He argued more specifically that any scientific claim must be expressed in such a way that there are observations that would—if they were made—count as evidence against the claim. In other words, scientific claims must be falsifiable . The claim that women talk more than men is falsifiable because systematic observations could reveal either that they do talk more than men or that they do not. As an example of an unfalsifiable claim, consider that many people who study extrasensory perception (ESP) and other psychic powers claim that such powers can disappear when they are observed too closely. This makes it so that no possible observation would count as evidence against ESP. If a careful test of a self-proclaimed psychic showed that she predicted the future at better-than-chance levels, this would be consistent with the claim that she had psychic powers. But if she failed to predict the future at better-than-chance levels, this would also be consistent with the claim because her powers can supposedly disappear when they are observed too closely.

Why should we concern ourselves with pseudoscience? There are at least three reasons. One is that learning about pseudoscience helps bring the fundamental features of science—and their importance—into sharper focus. A second is that graphology, psychic powers, astrology, and many other pseudoscientific beliefs are widely held and are promoted on the Internet and through other media sources. Learning what makes them pseudoscientific can help us to identify and evaluate such beliefs and practices when we encounter them. A third reason is that many pseudosciences purport to explain some aspect of human behavior and mental processes, including astrology, graphology (handwriting analysis), and magnet therapy for pain control, so it is important for students of psychology to distinguish their own field clearly from this “pseudopsychology.”

Key Takeaways

· Science is a general way of understanding the natural world. Its three fundamental features are systematic empiricism, empirical questions, and public knowledge.

· Psychology is a science because it takes the scientific approach to understanding human behavior.

· Pseudoscience refers to beliefs and activities that are claimed to be scientific but lack one or more of the three features of science. It is important to distinguish the scientific approach to understanding human behavior from the many pseudoscientific approaches.

People have always been curious about the natural world, including themselves and their behavior. (In fact, this is probably why you are studying psychology in the first place.) Science grew out of this natural curiosity and has become the best way to achieve detailed and accurate knowledge. In fact, almost all of the phenomena and theories that fill psychology textbooks are the products of scientific research.

Research in psychology can be described by a simple cyclical model. A research question based on the research literature leads to an empirical study, the results of which are published and become part of the research literature.

This cycle of research applies to both basic research and applied research. Basic research in psychology is conducted primarily for the sake of achieving a more detailed and accurate understanding of human behavior, without necessarily trying to address any particular practical problem. Applied research is conducted primarily to address some practical problem. Research on the effects of cell phone use on driving, for example, was prompted by safety concerns and has led to the enactment of laws to limit this practice. Although the distinction between basic and applied research is convenient, it is not always clear-cut. For example, basic research on sex differences in talkativeness could eventually have an effect on how marriage therapy is practiced, and applied research on the effect of cell phone use on driving could produce new insights into basic processes of perception, attention, and action.

· Research in psychology can be described by a simple cyclical model. A research question based on the research literature leads to an empirical study, the results of which are published and become part of the research literature.

· Basic research is conducted to learn about human behavior for its own sake, and applied research is conducted to solve some practical problem. Both are valuable, and the distinction between the two is not always clear-cut.

Some people wonder whether the scientific approach to psychology is necessary. Can we not reach the same conclusions based on common sense or intuition? Certainly we all have intuitive beliefs about people’s behavior, thoughts, and feelings—and these beliefs are collectively referred to as folk psychology. Although much of our folk psychology is probably reasonably accurate, it is clear that much of it is not. For example, most people believe that anger can be relieved by “letting it out”—perhaps by punching something or screaming loudly. Scientific research, however, has shown that this approach tends to leave people feeling more angry, not less (Bushman, 2002). Likewise, most people believe that no one would confess to a crime that he or she had not committed, unless perhaps that person was being physically tortured. But again, extensive empirical research has shown that false confessions are surprisingly common and occur for a variety of reasons (Kassin & Gudjonsson, 2004). In fact, you have probably received some advice based on "folk psychology." For example, upon hearing the old adage that "opposites attract" you might be tempted to enter a relationship with someone who has very different traits and values than you do. But then, you might hear that "birds of a feather flock together" and think it is better to find someone with more similar traits and values. Which is correct? The only way to answer this question once and for all is to use empiricism and the scientific method. And even then the answer is not as straightforward and simple as we would like!

How Could We Be So Wrong?

How can so many of our intuitive beliefs about human behavior be so wrong? Notice that this is a psychological question, and it just so happens that psychologists have conducted scientific research on it and identified many contributing factors (Gilovich, 1991). One is that forming detailed and accurate beliefs requires powers of observation, memory, and analysis to an extent that we do not naturally possess. It would be nearly impossible to count the number of words spoken by the women and men we happen to encounter, estimate the number of words they spoke per day, average these numbers for both groups, and compare them, all in our heads. This is why we tend to rely on mental shortcuts in forming and maintaining our beliefs. For example, if a belief such as "birds of a feather" is shared by many people, is endorsed by a wise “expert” (like your grandma), and makes intuitive sense, we tend to assume it is true. This is compounded by the fact that we then tend to focus on cases that confirm our intuitive beliefs and not on cases that disconfirm them. This is called confirmation bias. For example, once we begin to believe that women are more talkative than men, we tend to notice and remember talkative women and silent men but ignore or forget silent women and talkative men. We also hold incorrect beliefs in part because it would be nice if they were true. For example, many people believe that calorie-reducing diets are an effective long-term treatment for obesity, yet a thorough review of the scientific evidence has shown that they are not (Mann et al., 2007). People may continue to believe in the effectiveness of dieting in part because it gives them hope for losing weight if they are obese or makes them feel good about their own “self-control” if they are not.

Scientists, and especially psychologists, understand that they are just as susceptible as anyone else to intuitive but incorrect beliefs. This is why they cultivate an attitude of skepticism. That is, they pause to consider alternatives and to search for evidence (particularly systematically collected empirical evidence) when there is enough at stake to justify doing so. Imagine that you read a magazine article that claims that giving children a weekly allowance is a good way to help them develop financial responsibility. This is an interesting and potentially important claim (especially if you have kids). Taking an attitude of skepticism, however, would mean pausing to ask whether it might be instead that receiving an allowance merely teaches children to spend money, perhaps even to be more materialistic. Taking an attitude of skepticism would also mean asking what evidence supports the original claim. Is the author a scientific researcher? Is any scientific evidence cited? If the issue were important enough, it might also mean turning to the research literature to see if anyone else had studied it.

Because there is often not enough evidence to fully evaluate a belief or claim, scientists also cultivate tolerance for uncertainty. They accept that there are many things that they simply do not know. For example, it turns out that there is no scientific evidence that receiving an allowance causes children to be more financially responsible, nor is there any scientific evidence that it causes them to be materialistic. Although this kind of uncertainty can be problematic from a practical perspective—for example, making it difficult to decide what to do when our children ask for an allowance—it is exciting from a scientific perspective. If we do not know the answer to an interesting and empirically testable question, science may be able to provide the answer.

· People’s intuitions about human behavior, also known as folk psychology, often turn out to be wrong. This is one primary reason that psychology relies on science rather than common sense.

· Researchers in psychology cultivate certain critical-thinking attitudes. One is skepticism. They search for evidence and consider alternatives before accepting a claim about human behavior as true. Another is tolerance for uncertainty. They withhold judgment about whether a claim is true or not when there is insufficient evidence to decide.

Again, psychology is the scientific study of behavior and mental processes. But it is also the application of scientific research to “help people, organizations, and communities function better” (American Psychological Association, 2011). By far the most common and widely known application is the clinical practice of psychology—the diagnosis and treatment of psychological disorders and related problems. Let us use the term clinical practice broadly to refer to the activities of clinical and counseling psychologists, school psychologists, marriage and family therapists, licensed clinical social workers, and others who work with people individually or in small groups to identify and solve their psychological problems. It is important to consider the relationship between scientific research and clinical practice because many students are especially interested in clinical practice, perhaps even as a career.

Psychological disorders and other behavioral problems are part of the natural world. This means that questions about their nature, causes, and consequences are empirically testable and therefore subject to scientific study. As with other questions about human behavior, we cannot rely on our intuition or common sense for detailed and accurate answers. Consider, for example, that dozens of popular books and thousands of websites claim that adult children of alcoholics have a distinct personality profile, including low self-esteem, feelings of powerlessness, and difficulties with intimacy. Although this sounds plausible, scientific research has demonstrated that adult children of alcoholics are no more likely to have these problems than anybody else (Lilienfeld et al., 2010). Similarly, questions about whether a particular psychotherapy works are empirically testable questions that can be answered by scientific research. If a new psychotherapy is an effective treatment for depression, then systematic observation should reveal that depressed people who receive this psychotherapy improve more than a similar group of depressed people who do not receive this psychotherapy (or who receive some alternative treatment). Treatments that have been shown to work in this way are called empirically supported treatments. So not only is it important for scientific research in clinical psychology to continue, but it is also important for clinicians who never conduct a scientific study themselves to be scientifically literate so that they can read and evaluate new research and make treatment decisions based on the best available evidence.

Key Takeaways

· The clinical practice of psychology—the diagnosis and treatment of psychological problems—is one important application of the scientific discipline of psychology.

· Scientific research is relevant to other fields of psychology because it provides detailed and accurate knowledge about relevant human issues and establishes what factors are important in addressing those issues.

American Psychological Association. (2011). About APA. Retrieved from http://www.apa.org/about .

Bushman, B. J. (2002). Does venting anger feed or extinguish the flame? Catharsis, rumination, distraction, anger, and aggressive responding. Personality and Social Psychology Bulletin, 28, 724–731.

Collet, C., Guillot, A., & Petit, C. (2010). Phoning while driving I: A review of epidemiological, psychological, behavioural and physiological studies. Ergonomics, 53, 589–601.

Gilovich, T. (1991). How we know what isn’t so: The fallibility of human reason in everyday life. New York, NY: Free Press.

Hines, T. M. (1998). Comprehensive review of biorhythm theory. Psychological Reports, 83, 19–64.

Kassin, S. M., & Gudjonsson, G. H. (2004). The psychology of confession evidence: A review of the literature and issues. Psychological Science in the Public Interest, 5, 33–67.

Lilienfeld, S. O., Lynn, S. J., Ruscio, J., & Beyerstein, B. L. (2010). 50 great myths of popular psychology. Malden, MA: Wiley-Blackwell.

Mann, T., Tomiyama, A. J., Westling, E., Lew, A., Samuels, B., & Chatman, J. (2007). Medicare’s search for effective obesity treatments: Diets are not the answer. American Psychologist, 62, 220–233.

Norcross, J. C., Beutler, L. E., & Levant, R. F. (Eds.). (2005). Evidence-based practices in mental health: Debate and dialogue on the fundamental questions. Washington, DC: American Psychological Association.

Popper, K. R. (2002). Conjectures and refutations: The growth of scientific knowledge. New York, NY: Routledge.

Stanovich, K. E. (2010). How to think straight about psychology (9th ed.). Boston, MA: Allyn & Bacon.

10 Experimental research

Experimental research, often considered to be the ‘gold standard’ in research designs, is one of the most rigorous of all research designs. In this design, one or more independent variables are manipulated by the researcher (as treatments), subjects are randomly assigned to different treatment levels (random assignment), and the results of the treatments on outcomes (dependent variables) are observed. The unique strength of experimental research is its internal validity (causality) due to its ability to link cause and effect through treatment manipulation, while controlling for the spurious effects of extraneous variables.

Experimental research is best suited for explanatory research (rather than for descriptive or exploratory research), where the goal of the study is to examine cause-effect relationships. It also works well for research that involves a relatively limited and well-defined set of independent variables that can either be manipulated or controlled. Experimental research can be conducted in laboratory or field settings. Laboratory experiments, conducted in laboratory (artificial) settings, tend to be high in internal validity, but this comes at the cost of low external validity (generalisability), because the artificial (laboratory) setting in which the study is conducted may not reflect the real world. Field experiments are conducted in field settings such as in a real organisation, and are high in both internal and external validity. But such experiments are relatively rare, because of the difficulties associated with manipulating treatments and controlling for extraneous effects in a field setting.

Experimental research can be grouped into two broad categories: true experimental designs and quasi-experimental designs. Both designs require treatment manipulation, but while true experiments also require random assignment, quasi-experiments do not. Sometimes, we also refer to non-experimental research, which is not really a research design, but an all-inclusive term that includes all types of research that do not employ treatment manipulation or random assignment, such as survey research, observational research, and correlational studies.

Basic concepts

Treatment and control groups. In experimental research, some subjects are administered one or more experimental stimuli called a treatment (the treatment group) while other subjects are not given such a stimulus (the control group). The treatment may be considered successful if subjects in the treatment group rate more favourably on outcome variables than control group subjects. Multiple levels of experimental stimulus may be administered, in which case there may be more than one treatment group. For example, to test the effects of a new drug intended to treat a certain medical condition like dementia, a sample of dementia patients may be randomly divided into three groups, with the first group receiving a high dosage of the drug, the second group receiving a low dosage, and the third group receiving a placebo such as a sugar pill. The first two groups are then experimental groups and the third group is a control group. After administering the drug for a period of time, if the condition of the experimental group subjects improved significantly more than that of the control group subjects, we can say that the drug is effective. We can also compare the conditions of the high and low dosage experimental groups to determine if the high dose is more effective than the low dose.

Treatment manipulation. Treatments are the unique feature of experimental research that sets this design apart from all other research methods. Treatment manipulation helps control for the ‘cause’ in cause-effect relationships. Naturally, the validity of experimental research depends on how well the treatment was manipulated. Treatment manipulation must be checked using pretests and pilot tests prior to the experimental study. Any measurements conducted before the treatment is administered are called pretest measures, while those conducted after the treatment are posttest measures.

Random selection and assignment. Random selection is the process of randomly drawing a sample from a population or a sampling frame. This approach is typically employed in survey research, and ensures that each unit in the population has a positive chance of being selected into the sample. Random assignment, however, is a process of randomly assigning subjects to experimental or control groups. This is a standard practice in true experimental research to ensure that treatment groups are similar (equivalent) to each other and to the control group prior to treatment administration. Random selection is related to sampling, and is therefore more closely related to the external validity (generalisability) of findings. However, random assignment is related to design, and is therefore most related to internal validity. It is possible to have both random selection and random assignment in well-designed experimental research, but quasi-experimental research involves neither random selection nor random assignment.
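The difference between the two steps can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed procedure: the sampling frame, the sample size of 30, the group names (borrowed from the drug example above), and the function names are all hypothetical.

```python
import random


def random_selection(sampling_frame, sample_size, rng):
    """Sampling step: draw a simple random sample without replacement."""
    return rng.sample(sampling_frame, sample_size)


def random_assignment(subjects, group_names, rng):
    """Design step: shuffle the subjects, then deal them out to groups in turn."""
    shuffled = list(subjects)
    rng.shuffle(shuffled)
    groups = {name: [] for name in group_names}
    for index, subject in enumerate(shuffled):
        groups[group_names[index % len(group_names)]].append(subject)
    return groups


rng = random.Random(42)  # fixed seed, only so the sketch is reproducible
sampling_frame = [f"patient_{i:03d}" for i in range(200)]  # hypothetical frame
sample = random_selection(sampling_frame, 30, rng)
groups = random_assignment(sample, ["high_dose", "low_dose", "placebo"], rng)

for name, members in groups.items():
    print(name, len(members))  # each group receives 10 subjects
```

Selection decides who enters the study, which bears on generalisability. Assignment decides which condition each selected subject receives, which bears on the equivalence of groups before treatment.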

Threats to internal validity. Although experimental designs are considered more rigorous than other research methods in terms of the internal validity of their inferences (by virtue of their ability to control causes through treatment manipulation), they are not immune to internal validity threats. Some of these threats to internal validity are described below, within the context of a study of the impact of a special remedial math tutoring program for improving the math abilities of high school students.

History threat is the possibility that the observed effects (dependent variables) are caused by extraneous or historical events rather than by the experimental treatment. For instance, students’ post-remedial math score improvement may have been caused by their preparation for a math exam at their school, rather than the remedial math program.

Maturation threat refers to the possibility that observed effects are caused by natural maturation of subjects (e.g., a general improvement in their intellectual ability to understand complex concepts) rather than the experimental treatment.

Testing threat is a threat in pre-post designs where subjects’ posttest responses are conditioned by their pretest responses. For instance, if students remember their answers from the pretest evaluation, they may tend to repeat them in the posttest exam. Not conducting a pretest can help avoid this threat.

Instrumentation threat, which also occurs in pre-post designs, refers to the possibility that the difference between pretest and posttest scores is not due to the remedial math program, but due to changes in the administered test, such as the posttest having a higher or lower degree of difficulty than the pretest.

Mortality threat refers to the possibility that subjects may be dropping out of the study at differential rates between the treatment and control groups due to a systematic reason, such that the dropouts were mostly students who scored low on the pretest. If the low-performing students drop out, the results of the posttest will be artificially inflated by the preponderance of high-performing students.
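A small numeric sketch makes the inflation concrete. The scores and the dropout cutoff below are invented for illustration, and the program is deliberately assumed to have no effect at all, so any difference between groups comes purely from who dropped out.

```python
from statistics import mean

# Hypothetical scores. The tutoring program is assumed to have NO effect,
# so both groups start from the same underlying scores.
control_posttest = [42, 48, 55, 61, 66, 70, 74, 79]
treatment_pretest = [42, 48, 55, 61, 66, 70, 74, 79]
DROPOUT_CUTOFF = 50  # assumption: treatment students scoring below this drop out

# Only students who stayed in the treatment group take the posttest,
# and with zero true effect their scores are unchanged.
treatment_posttest = [s for s in treatment_pretest if s >= DROPOUT_CUTOFF]

apparent_effect = mean(treatment_posttest) - mean(control_posttest)
print(f"control mean:    {mean(control_posttest):.3f}")
print(f"treatment mean:  {mean(treatment_posttest):.3f}")
print(f"apparent effect: {apparent_effect:.3f}")
```

Two of the eight treatment students drop out, and the treatment group's posttest mean rises above the control mean even though nobody's score changed.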

Regression threat (also called regression to the mean) refers to the statistical tendency of a group’s overall performance to regress toward the mean during a posttest rather than in the anticipated direction. For instance, if subjects scored high on a pretest, they will have a tendency to score lower on the posttest (closer to the mean) because their high scores (away from the mean) during the pretest were possibly a statistical aberration. This problem tends to be more prevalent in non-random samples and when the two measures are imperfectly correlated.
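Regression to the mean can be reproduced with a short simulation. The sketch below assumes a simple model that is not part of the text: each observed score is a stable ability plus independent measurement noise, no treatment is applied, and the top 10% of pretest scorers are selected. All parameter values are arbitrary.

```python
import random
from statistics import mean

rng = random.Random(2024)  # fixed seed, only for reproducibility
N_STUDENTS = 5000

# Assumed model: observed score = stable ability + independent measurement noise.
ability = [rng.gauss(60, 10) for _ in range(N_STUDENTS)]
pretest = [a + rng.gauss(0, 10) for a in ability]
posttest = [a + rng.gauss(0, 10) for a in ability]  # no treatment applied

# Select the students who scored in the top 10% on the pretest.
cutoff = sorted(pretest)[int(0.9 * N_STUDENTS)]
selected = [i for i in range(N_STUDENTS) if pretest[i] >= cutoff]

top_pre = mean(pretest[i] for i in selected)
top_post = mean(posttest[i] for i in selected)
print(f"selected group, pretest mean:  {top_pre:.1f}")
print(f"selected group, posttest mean: {top_post:.1f}")
print(f"whole sample, posttest mean:   {mean(posttest):.1f}")
```

The selected group's posttest mean falls back toward the overall mean even though nothing was done to the students, because part of their high pretest score was noise. The same noise is why the two measures are imperfectly correlated.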

Two-group experimental designs

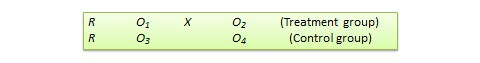

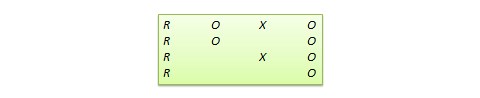

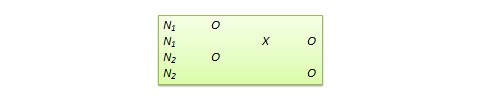

Pretest-posttest control group design. In this design, subjects are randomly assigned to treatment and control groups and given an initial (pretest) measurement of the dependent variables of interest; the treatment group is then administered a treatment (representing the independent variable of interest), and the dependent variables are measured again (posttest). The notation of this design is shown in Figure 10.1.

Statistical analysis of this design involves a simple analysis of variance (ANOVA) between the treatment and control groups. The pretest-posttest design handles several threats to internal validity, such as maturation, testing, and regression, since these threats can be expected to influence both treatment and control groups in a similar (random) manner. The selection threat is controlled via random assignment. However, additional threats to internal validity may exist. For instance, mortality can be a problem if there are differential dropout rates between the two groups, and the pretest measurement may bias the posttest measurement—especially if the pretest introduces unusual topics or content.
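As a rough sketch of how a treatment effect might be summarised in this design, the code below assumes the effect is taken as the pretest-to-posttest gain in the treatment group minus the corresponding gain in the control group. That summary, the function name, and the scores are illustrative assumptions, and the sketch does not replace the significance test described above.

```python
from statistics import mean


def pretest_posttest_effect(treat_pre, treat_post, ctrl_pre, ctrl_post):
    """Gain in the treatment group minus gain in the control group."""
    treatment_gain = mean(treat_post) - mean(treat_pre)
    control_gain = mean(ctrl_post) - mean(ctrl_pre)
    return treatment_gain - control_gain


# Hypothetical math scores for four students per group.
treat_pre, treat_post = [50, 55, 60, 65], [60, 66, 69, 75]
ctrl_pre, ctrl_post = [52, 54, 61, 63], [55, 57, 63, 65]

effect = pretest_posttest_effect(treat_pre, treat_post, ctrl_pre, ctrl_post)
print(effect)  # treatment gain 10.0 minus control gain 2.5 gives 7.5
```

Subtracting the control group's gain removes change that both groups would be expected to share, such as maturation or testing effects.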

Posttest-only control group design. This design is a simpler version of the pretest-posttest design where pretest measurements are omitted. The design notation is shown in Figure 10.2.

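The treatment effect is measured simply as the difference in the posttest scores between the two groups:

$E = O_{\text{treatment}} - O_{\text{control}}$

where each $O$ denotes a group's posttest measurement.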

The appropriate statistical analysis of this design is also a two-group analysis of variance (ANOVA). The simplicity of this design makes it more attractive than the pretest-posttest design in terms of internal validity. This design controls for maturation, testing, regression, selection, and pretest-posttest interaction, though the mortality threat may continue to exist.

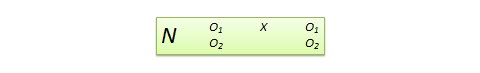

Because the pretest measure is not a measurement of the dependent variable, but rather a covariate, the treatment effect is measured as the difference in the posttest scores between the treatment and control groups:

$E = O_{\text{treatment}} - O_{\text{control}}$

where each $O$ denotes a group's posttest measurement.

Due to the presence of covariates, the appropriate statistical analysis of this design is a two-group analysis of covariance (ANCOVA). This design has all the advantages of the posttest-only design, but with greater internal validity because covariates are controlled. Covariance designs can also be extended to the pretest-posttest control group design.
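To show what controlling for a covariate does to the estimated effect, the sketch below computes a covariate-adjusted difference between group means using the pooled within-group slope, which is the point estimate a two-group ANCOVA with a common slope produces. It assumes a single covariate and a linear relationship, uses invented data and hypothetical function names, and omits the significance test.

```python
from statistics import mean


def within_group_sums(covariate, outcome):
    """Return the within-group sums of squares (S_xx) and cross-products (S_xy)."""
    x_bar, y_bar = mean(covariate), mean(outcome)
    s_xx = sum((x - x_bar) ** 2 for x in covariate)
    s_xy = sum((x - x_bar) * (y - y_bar) for x, y in zip(covariate, outcome))
    return s_xx, s_xy


def ancova_adjusted_effect(treat_cov, treat_out, ctrl_cov, ctrl_out):
    """Difference in posttest means, adjusted for the groups' covariate gap."""
    s_xx_t, s_xy_t = within_group_sums(treat_cov, treat_out)
    s_xx_c, s_xy_c = within_group_sums(ctrl_cov, ctrl_out)
    pooled_slope = (s_xy_t + s_xy_c) / (s_xx_t + s_xx_c)
    raw_difference = mean(treat_out) - mean(ctrl_out)
    covariate_gap = mean(treat_cov) - mean(ctrl_cov)
    return raw_difference - pooled_slope * covariate_gap


# Hypothetical data: the control group happens to have higher covariate values.
treat_cov, treat_out = [1, 2, 3, 4], [5, 7, 9, 11]
ctrl_cov, ctrl_out = [2, 3, 4, 5], [4, 6, 8, 10]

raw = mean(treat_out) - mean(ctrl_out)
adjusted = ancova_adjusted_effect(treat_cov, treat_out, ctrl_cov, ctrl_out)
print(raw, adjusted)  # raw difference 1, adjusted difference 3.0
```

In this made-up data the raw posttest difference understates the effect, because the control group started with an advantage on the covariate. The adjustment removes that advantage before the groups are compared.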

Factorial designs

Two-group designs are inadequate if your research requires manipulation of two or more independent variables (treatments). In such cases, you would need four or higher-group designs. Such designs, quite popular in experimental research, are commonly called factorial designs. Each independent variable in this design is called a factor , and each subdivision of a factor is called a level . Factorial designs enable the researcher to examine not only the individual effect of each treatment on the dependent variables (called main effects), but also their joint effect (called interaction effects).

In a factorial design, a main effect is said to exist if the dependent variable shows a significant difference between multiple levels of one factor, at all levels of other factors. No change in the dependent variable across factor levels is the null case (baseline), from which main effects are evaluated. In the above example, you may see a main effect of instructional type, instructional time, or both on learning outcomes. An interaction effect exists when the effect of differences in one factor depends upon the level of a second factor. In our example, if the effect of instructional type on learning outcomes is greater for three hours/week of instructional time than for one and a half hours/week, then we can say that there is an interaction effect between instructional type and instructional time on learning outcomes. Note that interaction effects, when present, dominate main effects and make them irrelevant, so it is not meaningful to interpret main effects if interaction effects are significant.
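The arithmetic behind main and interaction effects in a 2 x 2 design can be sketched from the four cell means. The cell means below are hypothetical, and the 0/1 coding of instructional type and instructional time is an assumption made for illustration; the sketch gives descriptive estimates only and does not test significance.

```python
def factorial_2x2_effects(cell_means):
    """Descriptive effects in a 2 x 2 factorial design.

    cell_means[(a, b)] is the mean outcome at level a of factor A and
    level b of factor B, with levels coded 0 and 1.
    """
    m = cell_means
    # Main effect: change across one factor, averaged over the other factor.
    main_a = ((m[1, 0] + m[1, 1]) - (m[0, 0] + m[0, 1])) / 2
    main_b = ((m[0, 1] + m[1, 1]) - (m[0, 0] + m[1, 0])) / 2
    # Interaction: effect of A at B = 1 minus effect of A at B = 0.
    interaction = (m[1, 1] - m[0, 1]) - (m[1, 0] - m[0, 0])
    return main_a, main_b, interaction


# Hypothetical mean learning outcomes.
# Factor A: instructional type (two types, coded 0 and 1).
# Factor B: instructional time (0 = 1.5 hours/week, 1 = 3 hours/week).
means = {(0, 0): 60, (0, 1): 65, (1, 0): 62, (1, 1): 75}

main_type, main_time, interaction = factorial_2x2_effects(means)
print(main_type, main_time, interaction)
```

Here the effect of instructional type is 2 points at one and a half hours/week but 10 points at three hours/week, so the interaction term is 8 and the averaged main effect of type (6 points) describes neither condition well.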

Hybrid experimental designs

Hybrid designs are those that are formed by combining features of more established designs. Three such hybrid designs are randomised block design, Solomon four-group design, and switched replication design.

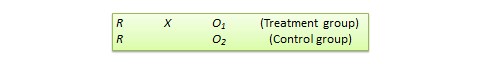

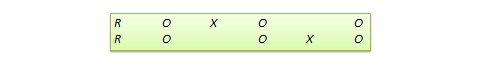

Randomised block design. This is a variation of the posttest-only or pretest-posttest control group design where the subject population can be grouped into relatively homogeneous subgroups (called blocks ) within which the experiment is replicated. For instance, if you want to replicate the same posttest-only design among university students and full-time working professionals (two homogeneous blocks), subjects in both blocks are randomly split between the treatment group (receiving the same treatment) and the control group (see Figure 10.5). The purpose of this design is to reduce the ‘noise’ or variance in data that may be attributable to differences between the blocks so that the actual effect of interest can be detected more accurately.
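The random split within each block can be sketched as follows. The function name, block labels, and subject identifiers are hypothetical; the only point illustrated is that randomisation happens separately inside every block, so each block contributes equally to the treatment and control groups.

```python
import random


def block_randomise(blocks, seed=None):
    """Randomly split the subjects of each block into treatment and control.

    blocks maps a block name to a list of subject identifiers.
    Returns a dict: subject -> (block name, group).
    """
    rng = random.Random(seed)
    assignment = {}
    for block_name, subjects in blocks.items():
        shuffled = list(subjects)
        rng.shuffle(shuffled)
        half = len(shuffled) // 2
        for subject in shuffled[:half]:
            assignment[subject] = (block_name, "treatment")
        for subject in shuffled[half:]:
            assignment[subject] = (block_name, "control")
    return assignment


# Hypothetical subjects in two homogeneous blocks.
blocks = {
    "students": ["s1", "s2", "s3", "s4", "s5", "s6"],
    "professionals": ["p1", "p2", "p3", "p4"],
}
assignment = block_randomise(blocks, seed=7)
for subject, (block, group) in sorted(assignment.items()):
    print(subject, block, group)
```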

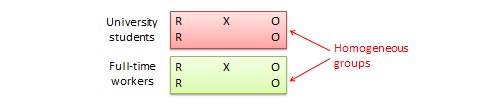

Solomon four-group design . In this design, the sample is divided into two treatment groups and two control groups. One treatment group and one control group receive the pretest, and the other two groups do not. This design represents a combination of posttest-only and pretest-posttest control group design, and is intended to test for the potential biasing effect of pretest measurement on posttest measures that tends to occur in pretest-posttest designs, but not in posttest-only designs. The design notation is shown in Figure 10.6.

Switched replication design . This is a two-group design implemented in two phases with three waves of measurement. The treatment group in the first phase serves as the control group in the second phase, and the control group in the first phase becomes the treatment group in the second phase, as illustrated in Figure 10.7. In other words, the original design is repeated or replicated temporally with treatment/control roles switched between the two groups. By the end of the study, all participants will have received the treatment either during the first or the second phase. This design is most feasible in organisational contexts where organisational programs (e.g., employee training) are implemented in a phased manner or are repeated at regular intervals.

Quasi-experimental designs

Quasi-experimental designs are almost identical to true experimental designs, but lack one key ingredient: random assignment. For instance, one entire class section or one organisation is used as the treatment group, while another section of the same class or a different organisation in the same industry is used as the control group. This lack of random assignment potentially results in groups that are non-equivalent, such as one group possessing greater mastery of certain content than the other group, say by virtue of having a better teacher in a previous semester, which introduces the possibility of selection bias. Quasi-experimental designs are therefore inferior to true experimental designs in internal validity due to the presence of a variety of selection-related threats such as selection-maturation threat (the treatment and control groups maturing at different rates), selection-history threat (the treatment and control groups being differentially impacted by extraneous or historical events), selection-regression threat (the treatment and control groups regressing toward the mean between pretest and posttest at different rates), selection-instrumentation threat (the treatment and control groups responding differently to the measurement), selection-testing (the treatment and control groups responding differently to the pretest), and selection-mortality (the treatment and control groups demonstrating differential dropout rates). Given these selection threats, it is generally preferable to avoid quasi-experimental designs to the greatest extent possible.

In addition, there are quite a few unique non-equivalent designs without corresponding true experimental design cousins. Some of the more useful of these designs are discussed next.

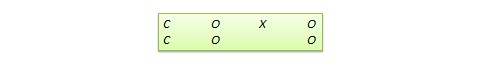

Regression discontinuity (RD) design. This is a non-equivalent pretest-posttest design where subjects are assigned to the treatment or control group based on a cut-off score on a preprogram measure. For instance, patients who are severely ill may be assigned to a treatment group to test the efficacy of a new drug or treatment protocol and those who are mildly ill are assigned to the control group. In another example, students who are lagging behind on standardised test scores may be selected for a remedial curriculum program intended to improve their performance, while those who score high on such tests are not selected for the remedial program.

Because of the use of a cut-off score, it is possible that the observed results may be a function of the cut-off score rather than the treatment, which introduces a new threat to internal validity. However, using the cut-off score also ensures that limited or costly resources are distributed to people who need them the most, rather than randomly across a population, while simultaneously allowing a quasi-experimental treatment. The control group scores in the RD design do not serve as a benchmark for comparing treatment group scores, given the systematic non-equivalence between the two groups. Rather, if there is no discontinuity between pretest and posttest scores in the control group, but such a discontinuity persists in the treatment group, then this discontinuity is viewed as evidence of the treatment effect.
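A common way to quantify such a discontinuity, offered here as an illustrative sketch rather than as the procedure of this chapter, is to fit one straight line to the pretest-posttest relationship on each side of the cut-off and compare the two predictions at the cut-off. The function names and scores are hypothetical, and the sketch assumes that subjects scoring below the cut-off receive the treatment, as in the remedial program example.

```python
from statistics import mean


def fit_line(x, y):
    """Ordinary least squares fit of y = intercept + slope * x."""
    mx, my = mean(x), mean(y)
    slope = (sum((a - mx) * (b - my) for a, b in zip(x, y))
             / sum((a - mx) ** 2 for a in x))
    return slope, my - slope * mx


def rd_jump(pretest, posttest, cutoff):
    """Gap between treated and untreated regression lines at the cut-off.

    Assumes subjects with pretest < cutoff received the treatment.
    """
    below = [(x, y) for x, y in zip(pretest, posttest) if x < cutoff]
    above = [(x, y) for x, y in zip(pretest, posttest) if x >= cutoff]
    slope_b, icpt_b = fit_line(*zip(*below))
    slope_a, icpt_a = fit_line(*zip(*above))
    return (icpt_b + slope_b * cutoff) - (icpt_a + slope_a * cutoff)


# Hypothetical scores: posttest equals pretest, plus 10 points for
# students below the cut-off of 50 who took the remedial program.
pretest = [30, 35, 40, 45, 55, 60, 65, 70]
posttest = [40, 45, 50, 55, 55, 60, 65, 70]

jump = rd_jump(pretest, posttest, cutoff=50)
print(jump)
```

Because the comparison is made at the cut-off itself, the systematic difference in pretest scores between the two groups does not enter the estimate directly, provided the straight-line assumption is reasonable on both sides.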

Proxy pretest design . This design, shown in Figure 10.11, looks very similar to the standard NEGD (pretest-posttest) design, with one critical difference: the pretest score is collected after the treatment is administered. A typical application of this design is when a researcher is brought in to test the efficacy of a program (e.g., an educational program) after the program has already started and pretest data is not available. Under such circumstances, the best option for the researcher is often to use a different prerecorded measure, such as students’ grade point average before the start of the program, as a proxy for pretest data. A variation of the proxy pretest design is to use subjects’ posttest recollection of pretest data, which may be subject to recall bias, but nevertheless may provide a measure of perceived gain or change in the dependent variable.

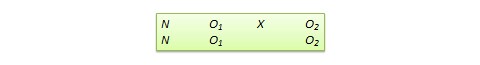

Separate pretest-posttest samples design . This design is useful if it is not possible to collect pretest and posttest data from the same subjects for some reason. As shown in Figure 10.12, there are four groups in this design, but two groups come from a single non-equivalent group, while the other two groups come from a different non-equivalent group. For instance, say you want to test customer satisfaction with a new online service that is implemented in one city but not in another. In this case, customers in the first city serve as the treatment group and those in the second city constitute the control group. If it is not possible to obtain pretest and posttest measures from the same customers, you can measure customer satisfaction at one point in time, implement the new service program, and measure customer satisfaction (with a different set of customers) after the program is implemented. Customer satisfaction is also measured in the control group at the same times as in the treatment group, but without the new program implementation. The design is not particularly strong, because you cannot examine the changes in any specific customer’s satisfaction score before and after the implementation, but you can only examine average customer satisfaction scores. Despite the lower internal validity, this design may still be a useful way of collecting quasi-experimental data when pretest and posttest data is not available from the same subjects.

An interesting variation of the NEDV design is a pattern-matching NEDV design , which employs multiple outcome variables and a theory that explains how much each variable will be affected by the treatment. The researcher can then examine if the theoretical prediction is matched in actual observations. This pattern-matching technique—based on the degree of correspondence between theoretical and observed patterns—is a powerful way of alleviating internal validity concerns in the original NEDV design.

Perils of experimental research

Experimental research is one of the most difficult of research designs, and should not be taken lightly. This type of research is often beset with a multitude of methodological problems. First, though experimental research requires theories for framing hypotheses for testing, much of current experimental research is atheoretical. Without theories, the hypotheses being tested tend to be ad hoc, possibly illogical, and meaningless. Second, many of the measurement instruments used in experimental research are not tested for reliability and validity, and are incomparable across studies. Consequently, results generated using such instruments are also incomparable. Third, experimental research often uses inappropriate research designs, such as irrelevant dependent variables, no interaction effects, no experimental controls, and non-equivalent stimulus across treatment groups. Findings from such studies tend to lack internal validity and are highly suspect. Fourth, the treatments (tasks) used in experimental research may be diverse, incomparable, and inconsistent across studies, and sometimes inappropriate for the subject population. For instance, undergraduate student subjects are often asked to pretend that they are marketing managers and asked to perform a complex budget allocation task in which they have no experience or expertise. The use of such inappropriate tasks introduces new threats to internal validity (i.e., subject’s performance may be an artefact of the content or difficulty of the task setting), generates findings that are non-interpretable and meaningless, and makes integration of findings across studies impossible.

The design of proper experimental treatments is a very important task in experimental design and must never be rushed or neglected, because the treatment is the raison d’être of the experimental method. To design an adequate and appropriate task, researchers should use prevalidated tasks if available, conduct treatment manipulation checks to check for the adequacy of such tasks (by debriefing subjects after performing the assigned task), conduct pilot tests (repeatedly, if necessary), and if in doubt, use tasks that are simple and familiar for the respondent sample rather than tasks that are complex or unfamiliar.

In summary, this chapter introduced key concepts in the experimental design research method and introduced a variety of true experimental and quasi-experimental designs. Although these designs vary widely in internal validity, designs with less internal validity should not be overlooked and may sometimes be useful under specific circumstances and empirical contingencies.

Social Science Research: Principles, Methods and Practices (Revised edition) Copyright © 2019 by Anol Bhattacherjee is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License , except where otherwise noted.


Statistical Design and Analysis of Biological Experiments

Chapter 1 Principles of Experimental Design

1.1 Introduction

The validity of conclusions drawn from a statistical analysis crucially hinges on the manner in which the data are acquired, and even the most sophisticated analysis will not rescue a flawed experiment. Planning an experiment and thinking about the details of data acquisition is so important for a successful analysis that R. A. Fisher—who single-handedly invented many of the experimental design techniques we are about to discuss—famously wrote

To call in the statistician after the experiment is done may be no more than asking him to perform a post-mortem examination: he may be able to say what the experiment died of. ( Fisher 1938 )

(Statistical) design of experiments provides the principles and methods for planning experiments and tailoring the data acquisition to an intended analysis. Design and analysis of an experiment are best considered as two aspects of the same enterprise: the goals of the analysis strongly inform an appropriate design, and the implemented design determines the possible analyses.

The primary aim of designing experiments is to ensure that valid statistical and scientific conclusions can be drawn that withstand the scrutiny of a determined skeptic. Good experimental design also considers that resources are used efficiently, and that estimates are sufficiently precise and hypothesis tests adequately powered. It protects our conclusions by excluding alternative interpretations or rendering them implausible. Three main pillars of experimental design are randomization , replication , and blocking , and we will flesh out their effects on the subsequent analysis as well as their implementation in an experimental design.

An experimental design is always tailored towards predefined (primary) analyses and an efficient analysis and unambiguous interpretation of the experimental data is often straightforward from a good design. This does not prevent us from doing additional analyses of interesting observations after the data are acquired, but these analyses can be subjected to more severe criticisms and conclusions are more tentative.

In this chapter, we provide the wider context for using experiments in a larger research enterprise and informally introduce the main statistical ideas of experimental design. We use a comparison of two samples as our main example to study how design choices affect an analysis, but postpone a formal quantitative analysis to the next chapters.

1.2 A Cautionary Tale

For illustrating some of the issues arising in the interplay of experimental design and analysis, we consider a simple example. We are interested in comparing the enzyme levels measured in processed blood samples from laboratory mice, when the sample processing is done either with a kit from a vendor A, or a kit from a competitor B. For this, we take 20 mice and randomly select 10 of them for sample preparation with kit A, while the blood samples of the remaining 10 mice are prepared with kit B. The experiment is illustrated in Figure 1.1 A and the resulting data are given in Table 1.1 .

One option for comparing the two kits is to look at the difference in average enzyme levels, and we find an average level of 10.32 for vendor A and 10.66 for vendor B. We would like to interpret their difference of -0.34 as the difference due to the two preparation kits and conclude whether the two kits give equal results or if measurements based on one kit are systematically different from those based on the other kit.

Such interpretation, however, is only valid if the two groups of mice and their measurements are identical in all aspects except the sample preparation kit. If we use one strain of mice for kit A and another strain for kit B, any difference might also be attributed to inherent differences between the strains. Similarly, if the measurements using kit B were conducted much later than those using kit A, any observed difference might be attributed to changes in, e.g., mice selected, batches of chemicals used, device calibration, or any number of other influences. None of these competing explanations for an observed difference can be excluded from the given data alone, but good experimental design allows us to render them (almost) arbitrarily implausible.

A second aspect for our analysis is the inherent uncertainty in our calculated difference: if we repeat the experiment, the observed difference will change each time, and this will be more pronounced for a smaller number of mice, among others. If we do not use a sufficient number of mice in our experiment, the uncertainty associated with the observed difference might be too large, such that random fluctuations become a plausible explanation for the observed difference. Systematic differences between the two kits, of practically relevant magnitude in either direction, might then be compatible with the data, and we can draw no reliable conclusions from our experiment.
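The dependence of this uncertainty on the number of mice can be made concrete with a small simulation. The sketch below repeats a hypothetical two-group experiment many times and records how much the observed difference in means fluctuates; the normal distribution, the mean of 10, the standard deviation of 1, and all function names are assumptions chosen for illustration and are not taken from Table 1.1.

```python
import random
from statistics import mean, stdev


def simulated_differences(n_per_group, true_diff=0.0, sd=1.0,
                          n_experiments=2000, seed=1):
    """Observed mean differences (A minus B) over repeated experiments."""
    rng = random.Random(seed)
    diffs = []
    for _ in range(n_experiments):
        kit_a = [rng.gauss(10.0, sd) for _ in range(n_per_group)]
        kit_b = [rng.gauss(10.0 - true_diff, sd) for _ in range(n_per_group)]
        diffs.append(mean(kit_a) - mean(kit_b))
    return diffs


# Spread of the observed difference when the kits are truly identical.
spread_small = stdev(simulated_differences(n_per_group=5))
spread_large = stdev(simulated_differences(n_per_group=50))
print(f"5 mice per kit:  spread of difference = {spread_small:.2f}")
print(f"50 mice per kit: spread of difference = {spread_large:.2f}")
```

Under these assumptions the spread shrinks roughly with the square root of the group size, so a tenfold increase in mice reduces the fluctuation of the observed difference by a factor of about three.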

In each case, the statistical analysis—no matter how clever—was doomed before the experiment was even started, while simple ideas from statistical design of experiments would have provided correct and robust results with interpretable conclusions.

1.3 The Language of Experimental Design

By an experiment we understand an investigation where the researcher has full control over selecting and altering the experimental conditions of interest, and we only consider investigations of this type. The selected experimental conditions are called treatments . An experiment is comparative if the responses to several treatments are to be compared or contrasted. The experimental units are the smallest subdivision of the experimental material to which a treatment can be assigned. All experimental units given the same treatment constitute a treatment group . Especially in biology, we often compare treatments to a control group to which some standard experimental conditions are applied; a typical example is using a placebo for the control group, and different drugs for the other treatment groups.

The values observed are called responses and are measured on the response units ; these are often identical to the experimental units but need not be. Multiple experimental units are sometimes combined into groupings or blocks , such as mice grouped by litter, or samples grouped by batches of chemicals used for their preparation. More generally, we call any grouping of the experimental material (even with group size one) a unit .

In our example, we selected the mice, used a single sample per mouse, deliberately chose the two specific vendors, and had full control over which kit to assign to which mouse. In other words, the two kits are the treatments and the mice are the experimental units. We took the measured enzyme level of a single sample from a mouse as our response, and samples are therefore the response units. The resulting experiment is comparative, because we contrast the enzyme levels between the two treatment groups.

Figure 1.1: Three designs to determine the difference between two preparation kits A and B based on four mice. A: One sample per mouse. Comparison between averages of samples with same kit. B: Two samples per mouse treated with the same kit. Comparison between averages of mice with same kit requires averaging responses for each mouse first. C: Two samples per mouse each treated with different kit. Comparison between two samples of each mouse, with differences averaged.

In this example, we can coalesce experimental and response units, because we have a single response per mouse and cannot distinguish a sample from a mouse in the analysis, as illustrated in Figure 1.1 A for four mice. Responses from mice with the same kit are averaged, and the kit difference is the difference between these two averages.

By contrast, if we take two samples per mouse and use the same kit for both samples, then the mice are still the experimental units, but each mouse now groups the two response units associated with it. Now, responses from the same mouse are first averaged, and these averages are used to calculate the difference between kits; even though eight measurements are available, this difference is still based on only four mice (Figure 1.1 B).

If we take two samples per mouse, but apply each kit to one of the two samples, then the samples are both the experimental and response units, while the mice are blocks that group the samples. Now, we calculate the difference between kits for each mouse, and then average these differences (Figure 1.1 C).
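The three ways of computing the kit difference can be sketched in a few lines. The record layout (mouse, kit, response), the function names, and the enzyme levels are all hypothetical; the sketch only mirrors the order of averaging described for Figure 1.1 A, B, and C.

```python
from statistics import mean


def difference_design_a(samples):
    """One sample per mouse: average the samples of each kit, then subtract."""
    a = [r for _, kit, r in samples if kit == "A"]
    b = [r for _, kit, r in samples if kit == "B"]
    return mean(a) - mean(b)


def difference_design_b(samples):
    """Two samples per mouse, same kit: average within each mouse first."""
    per_mouse = {}
    for mouse, kit, r in samples:
        per_mouse.setdefault((mouse, kit), []).append(r)
    a = [mean(v) for (_, kit), v in per_mouse.items() if kit == "A"]
    b = [mean(v) for (_, kit), v in per_mouse.items() if kit == "B"]
    return mean(a) - mean(b)


def difference_design_c(samples):
    """Two samples per mouse, one per kit: difference within mouse, then average."""
    by_mouse = {}
    for mouse, kit, r in samples:
        by_mouse.setdefault(mouse, {})[kit] = r
    return mean(d["A"] - d["B"] for d in by_mouse.values())


# Hypothetical enzyme levels for four mice under each design.
design_a = [(1, "A", 10), (2, "A", 11), (3, "B", 12), (4, "B", 11)]
design_b = [(1, "A", 10), (1, "A", 12), (2, "A", 9), (2, "A", 9),
            (3, "B", 11), (3, "B", 11), (4, "B", 10), (4, "B", 12)]
design_c = [(1, "A", 10), (1, "B", 11), (2, "A", 11), (2, "B", 12),
            (3, "A", 9), (3, "B", 10), (4, "A", 10), (4, "B", 10)]

diff_a = difference_design_a(design_a)
diff_b = difference_design_b(design_b)
diff_c = difference_design_c(design_c)
print(diff_a, diff_b, diff_c)
```

In the second function the eight measurements are reduced to four per-mouse averages before the comparison, which reflects that the difference is still based on only four experimental units.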

If we only use one kit and determine the average enzyme level, then this investigation is still an experiment, but is not comparative.

To summarize, the design of an experiment determines the logical structure of the experiment ; it consists of (i) a set of treatments (the two kits); (ii) a specification of the experimental units (animals, cell lines, samples) (the mice in Figure 1.1 A,B and the samples in Figure 1.1 C); (iii) a procedure for assigning treatments to units; and (iv) a specification of the response units and the quantity to be measured as a response (the samples and associated enzyme levels).

1.4 Experiment Validity

Before we embark on the more technical aspects of experimental design, we discuss three components for evaluating an experiment’s validity: construct validity , internal validity , and external validity . These criteria are well-established in areas such as educational and psychological research, and have more recently been discussed for animal research ( Würbel 2017 ) where experiments are increasingly scrutinized for their scientific rationale and their design and intended analyses.

1.4.1 Construct Validity

Construct validity concerns the choice of the experimental system for answering our research question. Is the system even capable of providing a relevant answer to the question?

Studying the mechanisms of a particular disease, for example, might require careful choice of an appropriate animal model that shows a disease phenotype and is accessible to experimental interventions. If the animal model is a proxy for drug development for humans, biological mechanisms must be sufficiently similar between animal and human physiologies.

Another important aspect of the construct is the quantity that we intend to measure (the measurand ), and its relation to the quantity or property we are interested in. For example, we might measure the concentration of the same chemical compound once in a blood sample and once in a highly purified sample, and these constitute two different measurands, whose values might not be comparable. Often, the quantity of interest (e.g., liver function) is not directly measurable (or even quantifiable) and we measure a biomarker instead. For example, pre-clinical and clinical investigations may use concentrations of proteins or counts of specific cell types from blood samples, such as the CD4+ cell count used as a biomarker for immune system function.

1.4.2 Internal Validity

The internal validity of an experiment concerns the soundness of the scientific rationale, statistical properties such as precision of estimates, and the measures taken against risk of bias. It refers to the validity of claims within the context of the experiment. Statistical design of experiments plays a prominent role in ensuring internal validity, and we briefly discuss the main ideas before providing the technical details and an application to our example in the subsequent sections.

Scientific Rationale and Research Question

The scientific rationale of a study is (usually) not immediately a statistical question. Translating a scientific question into a quantitative comparison amenable to statistical analysis is no small task and often requires careful consideration. It is a substantial, if non-statistical, benefit of using experimental design that we are forced to formulate a precise-enough research question and decide on the main analyses required for answering it before we conduct the experiment. For example, the question: is there a difference between placebo and drug? is insufficiently precise for planning a statistical analysis and determining an adequate experimental design. What exactly is the drug treatment? What should the drug’s concentration be and how is it administered? How do we make sure that the placebo group is comparable to the drug group in all other aspects? What do we measure and what do we mean by “difference?” A shift in average response, a fold-change, change in response before and after treatment?

The scientific rationale also enters the choice of a potential control group to which we compare responses. The quote

The deep, fundamental question in statistical analysis is ‘Compared to what?’ ( Tufte 1997 )

highlights the importance of this choice.

There are almost never enough resources to answer all relevant scientific questions. We therefore define a few questions of highest interest, and the main purpose of the experiment is answering these questions in the primary analysis . This intended analysis drives the experimental design to ensure relevant estimates can be calculated and have sufficient precision, and tests are adequately powered. This does not preclude us from conducting additional secondary analyses and exploratory analyses , but we are not willing to enlarge the experiment to ensure that strong conclusions can also be drawn from these analyses.

Risk of Bias

Experimental bias is a systematic difference in response between experimental units in addition to the difference caused by the treatments. The experimental units in the different groups are then not equal in all aspects other than the treatment applied to them. We saw several examples in Section 1.2 .

Minimizing the risk of bias is crucial for internal validity and we look at some common measures to eliminate or reduce different types of bias in Section 1.5 .

Precision and Effect Size

Another aspect of internal validity is the precision of estimates and the expected effect sizes. Is the experimental setup, in principle, able to detect a difference of relevant magnitude? Experimental design offers several methods for answering this question based on the expected heterogeneity of samples, the measurement error, and other sources of variation: power analysis is a technique for determining the number of samples required to reliably detect a relevant effect size and provide estimates of sufficient precision. More samples yield more precision and more power, but we have to be careful that replication is done at the right level: simply measuring a biological sample multiple times as in Figure 1.1 B yields more measured values, but is pseudo-replication for analyses. Replication should also ensure that the statistical uncertainties of estimates can be gauged from the data of the experiment itself, without additional untestable assumptions. Finally, the technique of blocking , shown in Figure 1.1 C, can remove a substantial proportion of the variation and thereby increase power and precision if we find a way to apply it.
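As a rough illustration of a power analysis, the sketch below uses the standard normal approximation for comparing two group means with equal group sizes and a known common standard deviation. The function name and default values are assumptions; an exact calculation based on the t distribution gives slightly larger numbers, and the count refers to experimental units, not to repeated measurements of the same unit.

```python
from math import ceil
from statistics import NormalDist


def samples_per_group(effect, sd, alpha=0.05, power=0.8):
    """Approximate experimental units needed per group (two-sided test).

    Normal approximation: n = 2 * (sd * (z_{1-alpha/2} + z_{power}) / effect)^2.
    """
    standard_normal = NormalDist()
    z_alpha = standard_normal.inv_cdf(1 - alpha / 2)
    z_power = standard_normal.inv_cdf(power)
    return ceil(2 * (sd * (z_alpha + z_power) / effect) ** 2)


# Hypothetical effect sizes, expressed relative to a standard deviation of 1.
n_large_effect = samples_per_group(effect=1.0, sd=1.0)
n_small_effect = samples_per_group(effect=0.5, sd=1.0)
print(n_large_effect, n_small_effect)
```

Halving the effect size to be detected roughly quadruples the required number of units per group, which is why the relevant effect size should be fixed before the experiment is planned.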

1.4.3 External Validity

The external validity of an experiment concerns its replicability and the generalizability of inferences. An experiment is replicable if its results can be confirmed by an independent new experiment, preferably by a different lab and researcher. Experimental conditions in the replicate experiment usually differ from the original experiment, which provides evidence that the observed effects are robust to such changes. A much weaker condition on an experiment is reproducibility , the property that an independent researcher draws equivalent conclusions based on the data from this particular experiment, using the same analysis techniques. Reproducibility requires publishing the raw data, details on the experimental protocol, and a description of the statistical analyses, preferably with accompanying source code. Many scientific journals subscribe to reporting guidelines to ensure reproducibility and these are also helpful for planning an experiment.

A main threat to replicability and generalizability is overly tight control of experimental conditions, in which case inferences only hold for a specific lab under the very specific conditions of the original experiment. Introducing systematic heterogeneity and using multi-center studies effectively broaden the experimental conditions and therefore the inferences for which internal validity is available.

For systematic heterogeneity , experimental conditions are systematically altered in addition to the treatments, and treatment differences estimated for each condition. For example, we might split the experimental material into several batches and use a different day of analysis, sample preparation, batch of buffer, measurement device, and lab technician for each batch. A more general inference is then possible if effect size, effect direction, and precision are comparable between the batches, indicating that the treatment differences are stable over the different conditions.

In multi-center experiments , the same experiment is conducted in several different labs and the results compared and merged. Multi-center approaches are very common in clinical trials and often necessary to reach the required number of patient enrollments.

Generalizability of randomized controlled trials in medicine and animal studies can suffer from overly restrictive eligibility criteria. In clinical trials, patients are often included or excluded based on co-medications and co-morbidities, and the resulting sample of eligible patients might no longer be representative of the patient population. For example, Travers et al. (2007) used the eligibility criteria of 17 randomized controlled trials of asthma treatments and found that out of 749 patients, only a median of 6% (45 patients) would be eligible for an asthma-related randomized controlled trial. This puts a question mark on the relevance of the trials’ findings for asthma patients in general.

1.5 Reducing the Risk of Bias

1.5.1 Randomization of Treatment Allocation

If systematic differences other than the treatment exist between our treatment groups, then the effect of the treatment is confounded with these other differences and our estimates of treatment effects might be biased.

We remove such unwanted systematic differences from our treatment comparisons by randomizing the allocation of treatments to experimental units. In a completely randomized design , each experimental unit has the same chance of being subjected to any of the treatments, and any differences between the experimental units other than the treatments are distributed over the treatment groups. Importantly, randomization is the only method that also protects our experiment against unknown sources of bias: we do not need to know all or even any of the potential differences and yet their impact is eliminated from the treatment comparisons by random treatment allocation.

Randomization has two effects: (i) differences unrelated to treatment become part of the ‘statistical noise’ rendering the treatment groups more similar; and (ii) the systematic differences are thereby eliminated as sources of bias from the treatment comparison.

Randomization transforms systematic variation into random variation.

In our example, a proper randomization would select 10 out of our 20 mice fully at random, such that every mouse has the same probability of being picked (10/20 = 1/2). These ten mice are then assigned to kit A, and the remaining mice to kit B. This allocation is entirely independent of the treatments and of any properties of the mice.
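A software-based version of this allocation can be sketched in a few lines of Python. The mouse labels, the function name `completely_randomize`, and the seed value are illustrative assumptions and not part of the original example; fixing a seed is only one possible way to make the allocation reproducible for later inspection.

```python
import random


def completely_randomize(units, n_first, seed=None):
    """Assign n_first randomly chosen units to kit 'A' and the rest to kit 'B'."""
    rng = random.Random(seed)  # dedicated generator; seed is optional
    # Sample without replacement: every unit is equally likely to be chosen.
    first_group = set(rng.sample(units, n_first))
    return {unit: ("A" if unit in first_group else "B") for unit in units}


# Hypothetical labels for the 20 mice of the running example.
mice = [f"mouse_{i:02d}" for i in range(1, 21)]
allocation = completely_randomize(mice, n_first=10, seed=2024)

for mouse, kit in allocation.items():
    print(mouse, "-> kit", kit)
```

The allocation depends only on the random number generator, not on the order in which the mice were caught, their weight, or any other property.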

To ensure random treatment allocation, some kind of random process needs to be employed. This can be as simple as shuffling a pack of 10 red and 10 black cards or using a software-based random number generator. Randomization is slightly more difficult if the number of experimental units is not known at the start of the experiment, such as when patients are recruited for an ongoing clinical trial (sometimes called rolling recruitment), and we want to have reasonable balance between the treatment groups at each stage of the trial.
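For the rolling-recruitment case, one common approach is permuted-block randomization. The text does not name a specific method, so the following is a sketch of that swapped-in technique under assumed settings: two treatments, a block size of four, and a hypothetical function name `permuted_block_allocation`.

```python
import itertools
import random


def permuted_block_allocation(treatments=("A", "B"), block_size=4, seed=None):
    """Yield treatment labels indefinitely, balanced within every block."""
    if block_size % len(treatments) != 0:
        raise ValueError("block_size must be a multiple of the number of treatments")
    rng = random.Random(seed)
    repeats = block_size // len(treatments)
    while True:
        # Each block contains every treatment equally often, in random order.
        block = list(treatments) * repeats
        rng.shuffle(block)
        yield from block


# Assign treatments to patients one by one as they are recruited.
assigner = permuted_block_allocation(block_size=4, seed=1)
first_ten = list(itertools.islice(assigner, 10))
print(first_ten)
```

With this scheme the two group sizes never differ by more than half a block, whatever the final number of patients turns out to be. The price is that the last assignments within a block become partly predictable, which matters if the allocation is not concealed.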

Seemingly random assignments “by hand” are usually no less complicated than fully random assignments, but are always inferior. If surprising results ensue from the experiment, such assignments are subject to unanswerable criticism and suspicion of unwanted bias. Even worse are systematic allocations; they can only remove bias from known causes, and immediately raise red flags under the slightest scrutiny.

The Problem of Undesired Assignments

Even with a fully random treatment allocation procedure, we might end up with an undesirable allocation. For our example, the treatment group of kit A might—just by chance—contain mice that are all bigger or more active than those in the other treatment group. Statistical orthodoxy recommends using the design nevertheless, because only full randomization guarantees valid estimates of residual variance and unbiased estimates of effects. This argument, however, concerns the long-run properties of the procedure and seems of little help in this specific situation. Why should we care if the randomization yields correct estimates under replication of the experiment, if the particular experiment is jeopardized?

Another solution is to create a list of all possible allocations that we would accept and randomly choose one of these allocations for our experiment. The analysis should then reflect this restriction in the possible randomizations, which often renders this approach difficult to implement.

The most pragmatic method is to reject highly undesirable designs and compute a new randomization (Cox 1958). Undesirable allocations are unlikely to arise for large sample sizes, and we might accept a small bias in estimation for small sample sizes, when uncertainty in the estimated treatment effect is already high. In this approach, whenever we reject a particular outcome, we must also be willing to reject the outcome if we permute the treatment level labels. If we reject eight big and two small mice for kit A, then we must also reject two big and eight small mice. We must also be transparent and report a rejected allocation, so that critics may come to their own conclusions about potential biases and their remedies.
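The reject-and-redraw idea can be sketched as follows. The body weights, the threshold of 1 g, and the function name `rerandomize` are made-up assumptions for illustration. The rejection rule uses the absolute difference in mean weight, so it treats an allocation and its label-swapped counterpart identically, and the function returns the number of rejected draws so that it can be reported.

```python
import random


def rerandomize(weights, n_first, max_mean_diff, seed=None, max_tries=10_000):
    """Redraw random allocations until the group mean weights are close enough.

    Returns (group_a, group_b, n_rejected) with groups given as index lists.
    """
    rng = random.Random(seed)
    indices = list(range(len(weights)))
    for n_rejected in range(max_tries):
        group_a = sorted(rng.sample(indices, n_first))
        chosen = set(group_a)
        group_b = [i for i in indices if i not in chosen]
        mean_a = sum(weights[i] for i in group_a) / len(group_a)
        mean_b = sum(weights[i] for i in group_b) / len(group_b)
        # abs() makes the rule symmetric under swapping the labels A and B.
        if abs(mean_a - mean_b) <= max_mean_diff:
            return group_a, group_b, n_rejected
    raise RuntimeError("no acceptable allocation found; relax the criterion")


# Hypothetical body weights in grams for 20 mice (not real data).
weights = [18.5 + 0.5 * i for i in range(20)]
group_a, group_b, n_rejected = rerandomize(weights, n_first=10, max_mean_diff=1.0, seed=3)
print("kit A:", group_a)
print("kit B:", group_b)
print("rejected allocations:", n_rejected)
```

The acceptance criterion should be fixed before looking at any draw; tightening it after seeing an allocation would reintroduce the subjectivity that randomization is meant to remove.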

1.5.2 Blinding

Bias in treatment comparisons is also introduced if treatment allocation is random, but responses cannot be measured entirely objectively, or if knowledge of the assigned treatment affects the response. In clinical trials, for example, patients might react differently when they know they are on a placebo treatment, an effect known as cognitive bias. In animal experiments, caretakers might report more abnormal behavior for animals on a more severe treatment. Cognitive bias can be eliminated by concealing the treatment allocation from technicians or participants of a clinical trial, a technique called single-blinding.

If response measures are partially based on professional judgement (such as a clinical scale), patient or physician might unconsciously report lower scores for a placebo treatment, a phenomenon known as observer bias. Its removal requires double-blinding, where treatment allocations are additionally concealed from the experimentalist.

Blinding requires randomized treatment allocation to begin with and substantial effort might be needed to implement it. Drug companies, for example, have to go to great lengths to ensure that a placebo looks, tastes, and feels similar enough to the actual drug. Additionally, blinding is often done by coding the treatment conditions and samples, and effect sizes and statistical significance are calculated before the code is revealed.
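Coding the treatment conditions can be sketched as below. This is a minimal illustration under assumptions: the sample names, the neutral code labels, and the function name `blind_labels` are hypothetical, and in practice the key would be held by someone not involved in measurement or analysis until the analysis is complete.

```python
import random


def blind_labels(allocation, seed=None):
    """Replace treatment names by neutral codes.

    Returns (blinded, key): `blinded` maps sample -> code and is handed to the
    analyst; `key` maps code -> treatment and is stored separately.
    """
    rng = random.Random(seed)
    treatments = sorted(set(allocation.values()))
    codes = [f"code_{i + 1}" for i in range(len(treatments))]
    rng.shuffle(codes)  # which code stands for which treatment is random
    key = dict(zip(codes, treatments))
    to_code = {treatment: code for code, treatment in key.items()}
    blinded = {sample: to_code[t] for sample, t in allocation.items()}
    return blinded, key


# Hypothetical allocation of four samples to the two kits.
example_allocation = {
    "sample_01": "kit A",
    "sample_02": "kit B",
    "sample_03": "kit B",
    "sample_04": "kit A",
}
blinded, key = blind_labels(example_allocation, seed=7)
print(blinded)  # safe to share with the analyst
```

Effect sizes and tests are then computed on the coded groups, and `key` is consulted only afterwards to translate the codes back into treatments.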

In clinical trials, double-blinding creates a conflict of interest. The attending physicians do not know which patient received which treatment, and thus accumulation of side-effects cannot be linked to any treatment. For this reason, clinical trials have a data monitoring committee not involved in the final analysis, that performs intermediate analyses of efficacy and safety at predefined intervals. If severe problems are detected, the committee might recommend altering or aborting the trial. The same might happen if one treatment already shows overwhelming evidence of superiority, such that it becomes unethical to withhold this treatment from the other patients.

1.5.3 Analysis Plan and Registration

An often overlooked source of bias has been termed the researcher degrees of freedom or garden of forking paths in the data analysis. For any set of data, there are many different options for its analysis: some results might be considered outliers and discarded, assumptions are made on error distributions and appropriate test statistics, different covariates might be included into a regression model. Often, multiple hypotheses are investigated and tested, and analyses are done separately on various (overlapping) subgroups. Hypotheses formed after looking at the data require additional care in their interpretation; almost never will \(p\)-values for these ad hoc or post hoc hypotheses be statistically justifiable. Many different measured response variables invite fishing expeditions, where patterns in the data are sought without an underlying hypothesis. Only reporting those sub-analyses that gave ‘interesting’ findings invariably leads to biased conclusions and is called cherry-picking or \(p\)-hacking (or much less flattering names).

The statistical analysis is always part of a larger scientific argument and we should consider the necessary computations in relation to building our scientific argument about the interpretation of the data. In addition to the statistical calculations, this interpretation requires substantial subject-matter knowledge and includes (many) non-statistical arguments. Two quotes highlight that experiment and analysis are a means to an end and not the end in itself.

There is a boundary in data interpretation beyond which formulas and quantitative decision procedures do not go, where judgment and style enter. (Abelson 1995)

Often, perfectly reasonable people come to perfectly reasonable decisions or conclusions based on nonstatistical evidence. Statistical analysis is a tool with which we support reasoning. It is not a goal in itself. (Bailar III 1981)

There is often a grey area between exploiting researcher degrees of freedom to arrive at a desired conclusion, and creative yet informed analyses of data. One way to navigate this area is to distinguish between exploratory studies and confirmatory studies. The former have no clearly stated scientific question, but are used to generate interesting hypotheses by identifying potential associations or effects that are then further investigated. Conclusions from these studies are very tentative and must be reported honestly as such. In contrast, standards are much higher for confirmatory studies, which investigate a specific predefined scientific question. Analysis plans and pre-registration of an experiment are accepted means for demonstrating lack of bias due to researcher degrees of freedom, and separating primary from secondary analyses allows emphasizing the main goals of the study.

Analysis Plan

The analysis plan is written before conducting the experiment and details the measurands and estimands, the hypotheses to be tested together with a power and sample size calculation, a discussion of relevant effect sizes, detection and handling of outliers and missing data, as well as steps for data normalization such as transformations and baseline corrections. If a regression model is required, its factors and covariates are outlined. Particularly in biology, handling measurements below the limit of quantification and saturation effects require careful consideration.

In the context of clinical trials, the problem of estimands has become a recent focus of attention. An estimand is the target of a statistical estimation procedure, for example the true average difference in enzyme levels between the two preparation kits. A main problem in many studies is that post-randomization events can change the estimand, even if the estimation procedure remains the same. For example, if kit B fails to produce usable samples for measurement in five out of ten cases because the enzyme level was too low, while kit A could handle these enzyme levels perfectly fine, then this might severely exaggerate the observed difference between the two kits. Similar problems arise in drug trials, when some patients stop taking one of the drugs due to side-effects or other complications.

Registration

Registration of experiments is an even more severe measure used in conjunction with an analysis plan and is becoming standard in clinical trials. Here, information about the trial, including the analysis plan, procedure to recruit patients, and stopping criteria, is registered in a public database. Publications based on the trial then refer to this registration, such that reviewers and readers can compare what the researchers intended to do and what they actually did. Similar portals for pre-clinical and translational research are also available.

1.6 Notes and Summary

The problem of measurements and measurands is further discussed for statistics in Hand (1996) and specifically for biological experiments in Coxon, Longstaff, and Burns (2019). A general review of methods for handling missing data is Dong and Peng (2013). The different roles of randomization are emphasized in Cox (2009).

Two well-known reporting guidelines are the ARRIVE guidelines for animal research (Kilkenny et al. 2010) and the CONSORT guidelines for clinical trials (Moher et al. 2010). Guidelines describing the minimal information required for reproducing experimental results have been developed for many types of experimental techniques, including microarrays (MIAME), RNA sequencing (MINSEQE), metabolomics (MSI) and proteomics (MIAPE) experiments; the FAIRSHARE initiative provides a more comprehensive collection (Sansone et al. 2019).

The problems of experimental design in animal experiments and particularly translational research are discussed in Couzin-Frankel (2013). Multi-center studies are now considered for these investigations, and using a second laboratory already increases reproducibility substantially (Richter et al. 2010; Richter 2017; Voelkl et al. 2018; Karp 2018) and allows standardizing the treatment effects (Kafkafi et al. 2017). First attempts are reported of using designs similar to clinical trials (Llovera and Liesz 2016). Exploratory-confirmatory research and external validity for animal studies are discussed in Kimmelman, Mogil, and Dirnagl (2014) and Pound and Ritskes-Hoitinga (2018). Further information on pilot studies is found in Moore et al. (2011), Sim (2019), and Thabane et al. (2010).

The deliberate use of statistical analyses and their interpretation for supporting a larger argument was called statistics as principled argument (Abelson 1995). Employing useless statistical analysis without reference to the actual scientific question is surrogate science (Gigerenzer and Marewski 2014) and adaptive thinking is integral to meaningful statistical analysis (Gigerenzer 2002).

In an experiment, the investigator has full control over the experimental conditions applied to the experimental material. The experimental design gives the logical structure of an experiment: the units describing the organization of the experimental material, the treatments and their allocation to units, and the response. Statistical design of experiments includes techniques to ensure internal validity of an experiment, and methods to make inference from experimental data efficient.

5.1 Experiment Basics

Learning objectives.

- Explain what an experiment is and recognize examples of studies that are experiments and studies that are not experiments.

- Distinguish between the manipulation of the independent variable and control of extraneous variables and explain the importance of each.

- Recognize examples of confounding variables and explain how they affect the internal validity of a study.

What Is an Experiment?